Impact

Strengthen Economic Resilience Through Digital Transformation

The successful digitalization of the economy and infrastructure is crucial to ensuring that Germany and Europe are ready for the future. For this reason, the German Recovery and Resilience Plan (GRRP) lays the foundation for starting the IPCEI-CIS (Important Project of Common European Interest on Next Generation Cloud Infrastructure and Services) and, subsequently, the Apeiro project.

The two components that are most important in quantitative terms are “data as the raw material of the future” and the promotion of the digital transition of the economy. According to the GRRP, the aim of the IPCEI-CIS is to create the basis for a sovereign, highly scalable edge cloud infrastructure in Europe. The infrastructure will build on highly innovative, real-time-capable structures distributed across Europe and will be operated in a highly efficient, energy-saving way.

Apeiro concepts and components have a significant impact on the GRRP's goals.

Sustainability

Cloud computing, despite its numerous benefits, presents significant implications for energy consumption. The vast data centers that power cloud services require immense amounts of electricity to operate and cool their servers. As more businesses and individuals adopt cloud computing, the energy demand for these data centers continues to rise, contributing to increased greenhouse gas emissions and carbon footprints. Moreover, the constant data transfer and storage also consume considerable energy, further exacerbating the environmental impact.

One of the key challenges in mitigating this impact is the difficulty in accurately measuring and achieving transparency in energy consumption. Many cloud providers do not disclose detailed energy usage data, making it hard for consumers to assess the environmental impact of their cloud services. Additionally, the complex and dynamic nature of cloud workloads makes precise energy measurement challenging. Improving measurement techniques and increasing transparency are crucial steps towards understanding and reducing the energy consumption associated with cloud computing. This would not only help in optimizing energy use but also enable more informed decision-making for both cloud providers and consumers aiming to minimize their environmental footprint.

Apeiro is dedicated to pioneering sustainable operations across the cloud edge continuum by integrating advanced technologies, standards, and operational transparency.

The following key aspects highlight how Apeiro plans to explore, research, and contribute to a more sustainable digital infrastructure by improving transparency in energy consumption and providing solutions which reduce the carbon footprint:

- Digital Twin-Enabled Data Centers: Working towards the immersive data center based on digital twins to visualize energy flows and optimize data center operations, supporting initiatives to reduce energy waste and improve efficiency, from the construction phase of the data center through daily operations.

- Green Operations Platform: Building the foundation with a metrics data lake that captures and analyzes energy data, providing actionable insights for ongoing sustainability initiatives.

- Energy-Transparent Scheduling: By providing transparent (energy-)cost scheduling options, Apeiro allows for informed workload placement that optimizes energy consumption across multiple providers.

- Server Power Automation: Introducing server-specific actuators for dynamic switching of server states based on demand, ensuring that energy is only consumed when necessary and reducing idle power draw.

- Reference Architectures for Efficiency: Advancing energy- and cost-optimized data center reference blueprints, enabling scalable adoption of best practices in sustainable cloud-edge design.

Apeiro tackles rising energy demands, lack of transparency in multi-provider environments, and the complexity of optimizing distributed workloads for sustainability. By focusing on interoperability, visualization, and automated energy management, Apeiro empowers operators to meet environmental targets without sacrificing performance or scalability.
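Energy-transparent scheduling and demand-driven server power states, as listed above, can be illustrated with a small sketch. This is not Apeiro code or an Apeiro API: the `Site` fields, the emissions estimate (IT energy multiplied by the site's PUE and the grid's carbon intensity), and the 20% headroom default are all assumptions chosen for illustration.

```python
from dataclasses import dataclass
import math


@dataclass(frozen=True)
class Site:
    """A candidate location for a workload (all fields are hypothetical)."""
    name: str
    free_cpu_cores: int
    pue: float                # power usage effectiveness of the site (>= 1.0)
    grid_gco2_per_kwh: float  # carbon intensity of the local grid


def estimated_emissions(site: Site, it_energy_kwh: float) -> float:
    """Estimated grams of CO2 for a job that needs it_energy_kwh of IT energy."""
    return it_energy_kwh * site.pue * site.grid_gco2_per_kwh


def place_workload(sites, cpu_cores: int, it_energy_kwh: float):
    """Return the site with enough free cores and the lowest estimate, or None."""
    candidates = [s for s in sites if s.free_cpu_cores >= cpu_cores]
    if not candidates:
        return None
    return min(candidates, key=lambda s: estimated_emissions(s, it_energy_kwh))


def servers_to_keep_on(demand_cores: int, cores_per_server: int,
                       headroom: float = 0.2) -> int:
    """Servers that must stay powered on to cover demand plus headroom."""
    if demand_cores <= 0:
        return 0
    return math.ceil(demand_cores * (1 + headroom) / cores_per_server)


if __name__ == "__main__":
    sites = [
        Site("edge-a", free_cpu_cores=8, pue=1.5, grid_gco2_per_kwh=400.0),
        Site("dc-b", free_cpu_cores=64, pue=1.2, grid_gco2_per_kwh=100.0),
    ]
    chosen = place_workload(sites, cpu_cores=4, it_energy_kwh=10.0)
    print(chosen.name if chosen else "no capacity")
    print(servers_to_keep_on(demand_cores=100, cores_per_server=32))
```

The sketch only works if every provider publishes comparable energy and carbon figures, which is the transparency gap described at the start of this section. A real scheduler would also weigh latency, cost, and data location.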

Sovereignty

Cloud infrastructures increasingly face conflicting political, regulatory, or economic interests. Cloud providers and users are therefore well advised to increase their resilience and pursue a de-risking approach.

To ensure a sovereign cloud infrastructure, Apeiro provides an open, standards-based blueprint. Sovereign cloud infrastructures offer a compelling solution for organizations seeking to balance data sovereignty, security, and compliance with the flexibility and scalability of cloud services. Particularly at a time when data privacy and regulatory requirements are increasingly stringent, the Apeiro blueprint can be a good basis for any organization looking to establish a sovereign cloud-native infrastructure. Building on top of Apeiro helps control costs through the consistent use of open source and reduces complexity by applying standardized methodologies across the complete stack.

The benefits of a sovereign cloud infrastructure include these key aspects:

- Data Sovereignty: Sovereign cloud infrastructure ensures that data is stored and processed within the geographical boundaries of a specific country or region. This compliance with local laws and regulations helps organizations avoid legal complications and ensures that sensitive data is protected according to national standards.

- Enhanced Security: By utilizing sovereign cloud services, organizations can benefit from enhanced security measures tailored to meet local requirements. This includes robust encryption, access controls, and monitoring systems that are designed to protect data from unauthorized access and cyber threats.

- Compliance with Regulations: Sovereign cloud providers are often well-versed in local regulations, such as GDPR in Europe. This expertise allows organizations to navigate complex compliance landscapes more effectively, reducing the risk of penalties and legal issues associated with data mishandling.

- Trust and Transparency: Sovereign cloud infrastructure fosters trust among customers and stakeholders by ensuring transparency in data handling practices. Organizations can provide assurances that their data is managed in accordance with local laws, which can enhance customer confidence and loyalty.

- Tailored Solutions: Sovereign cloud providers often offer customized solutions that cater to the specific needs of local businesses and industries. This localized approach can lead to better alignment with organizational goals and improved service delivery.

- Support for Local Economies: By choosing sovereign cloud infrastructure, organizations contribute to the growth of local economies. This investment in domestic technology providers can stimulate job creation and innovation within the region.

- Reduced Latency: Sovereign cloud infrastructure typically involves data centers located within the same region as the business users. This proximity can lead to reduced latency and improved performance for applications that require real-time data processing.
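The data sovereignty aspect above, keeping data within defined geographical boundaries, can be expressed as a simple policy check. The following is a minimal sketch under stated assumptions: `ResidencyPolicy`, the region identifiers, and the replica names are hypothetical and do not come from Apeiro.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ResidencyPolicy:
    """Regions in which a dataset may be stored and processed (hypothetical)."""
    dataset: str
    allowed_regions: frozenset


def violations(policy: ResidencyPolicy, placements: dict) -> list:
    """Return the sorted replica names whose region is outside the policy."""
    return sorted(
        replica
        for replica, region in placements.items()
        if region not in policy.allowed_regions
    )


if __name__ == "__main__":
    policy = ResidencyPolicy("customer-records", frozenset({"eu-de", "eu-fr"}))
    # Maps replica name -> region; the region identifiers are made up.
    placements = {"replica-1": "eu-de", "replica-2": "us-east"}
    print(violations(policy, placements))
```

A check like this only covers where data is placed. Legal and operational control over the provider, which the other aspects in the list address, cannot be verified this way.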

Artificial Intelligence

Apeiro fuels artificial intelligence innovation at the cloud edge continuum by providing a robust, extensible platform. This includes best practices and architectural flexibility for hosting AI workloads in an Apeiro environment, as well as the use of AI as an integral part of the Apeiro tooling and services for operating the platform itself.

These aspects illustrate how Apeiro plans to explore, research, and contribute to advancing the use of AI and to address contemporary challenges:

- Agentic Operations: Today, managing the cloud infrastructure effectively requires considerable manual effort from the DevOps engineering team. We aim to increase the level of automation and autonomy in operating Apeiro environments. This allows more efficient operations of distributed cloud edge environments as well as decentralized and potentially air-gapped data centers. As a result, we want to drastically reduce the costs for infrastructure operations and minimize the need for highly specialized employees.

- AI Extensions: Apeiro establishes an enterprise-ready reference architecture that simplifies the integration of new AI components and services, ensuring modular growth and fast adoption of innovation.

- Visual and Simulated Decisions: The immersive data center facilitates understandable AI by providing visualization and simulation tools for decision processes, increasing transparency and trust.

- Compliance and Security: With robust packaging, cryptography, and compliance mechanisms, Apeiro streamlines AI-assisted deployment while maintaining high security and regulatory standards.

Apeiro confronts the intricacies of deploying, scaling, and governing AI workloads across heterogeneous, distributed environments. By promoting standardization, transparency, and automation, Apeiro mitigates risks related to interoperability, explainability, and operational complexity, accelerating responsible AI adoption throughout the cloud edge continuum.

Flexibility Through Standardization

We define a blueprint of documentation and components that adopters can freely assemble and augment to cater for different cloud scenarios. We embrace the diversity of existing environments and emphasize standardization at critical interface layers while allowing differentiation and specialization where needed. To support this, all components are offered as open source, and all interfaces and pluggable services rely on open standards.

This standardization provides substantial value for all personas (software builders, application developers, and service and infrastructure providers) on the different layers that we address with Apeiro (Baremetal Operating System, Cloud Operating System, and Data Fabric). It allows everyone to focus on differentiating capabilities while leveraging the Apeiro blueprint for commodity functionality.

The overall toolset is rich enough to support different target landscapes. It covers complete greenfield deployments based on the Apeiro blueprint as well as pick-and-choose adoption for brownfield deployments, which makes it possible to leverage existing investments in hardware and software. The setup can scale from resource-optimized edge deployments to enterprise-grade high-performance data centers. On another dimension, usage patterns range from pure in-house scenarios, where the cloud infrastructure is operated for local purposes in a sovereign way, to commercial and public scenarios that scale to federated cloud environments; standardization keeps all of these patterns compatible. Depending on the scenario, the blueprint can be used (in combination with any existing assets) to assemble completely self-contained cloud environments with a predefined and well-known set of services, mostly for edge deployments. At the other end of the spectrum, it allows completely open environments for custom workload handling, as long as all participants follow the open standards.

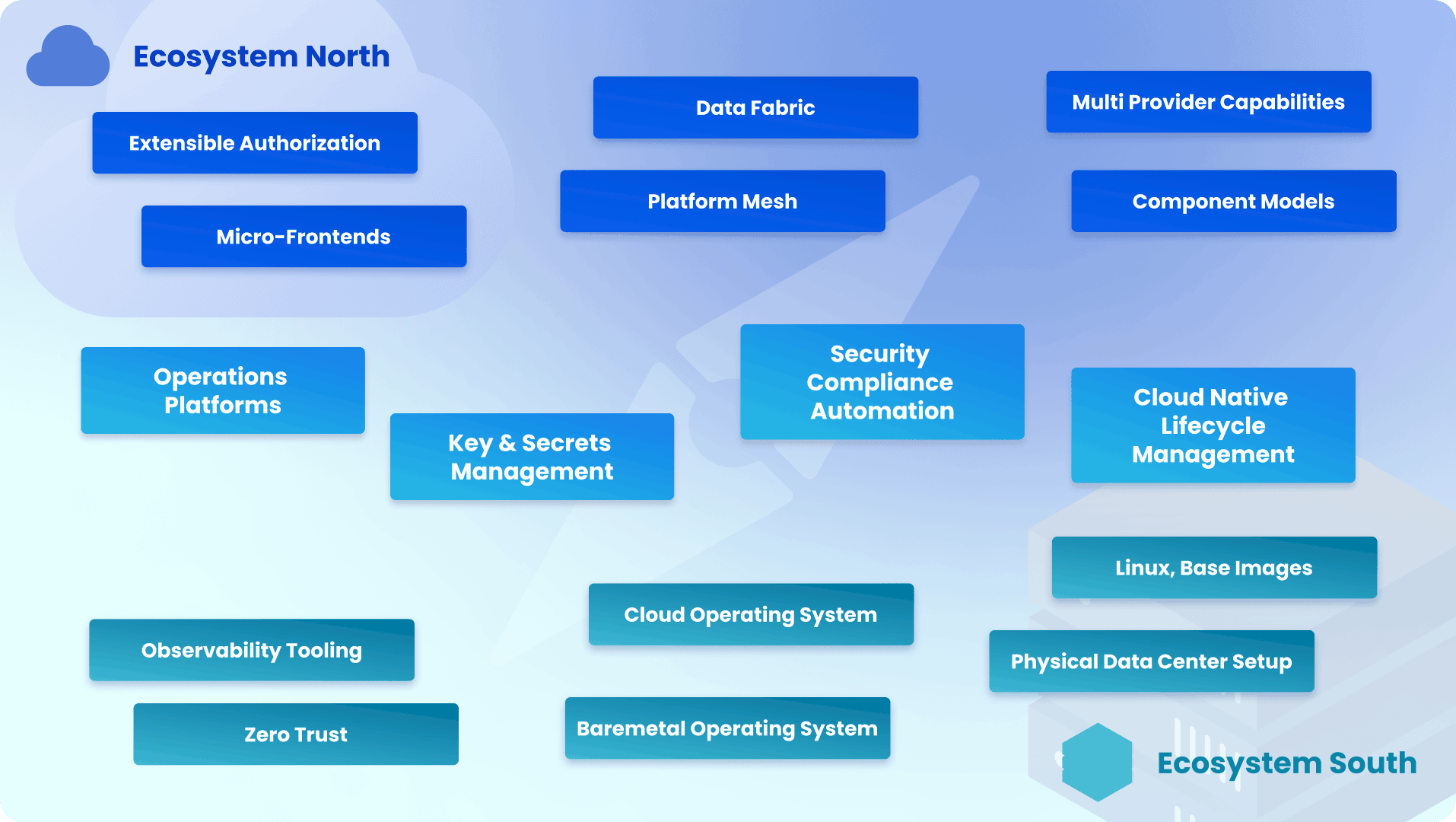

The Apeiro-Reference-Architecture Layers

We initiate and contribute to multiple open-source projects on the different layers of the overall blueprint. Contributions to and enhancements of the Apeiro landscape are possible in two ways: by helping to evolve the open-source components that we describe in more detail below, and by contributing completely new components that adhere to the standardized interfaces and hence fit into the overall architecture.

In the detailed sections of this area, we describe the projects and components and the value they provide in the different areas of a cloud environment.